Unveiling secrets: Hidden APIs for web scraping

Table of Contents:

- Introduction

- Understanding the Code

- Exploring the Inspect Element Tool

- Analyzing Network Requests

- Mimicking Requests in Code

- Using API Tools

- Dealing with Response Headers

- Working with JSON Data

- Extracting Product Information

- Creating a Pandas Data Frame

- Exporting Results to CSV

- Conclusion

Introduction

In this article, we explore how to scrape a website with Python by calling the hidden API behind it instead of parsing the rendered page. We cover inspecting the page with the browser's inspect element tool, analyzing network requests, and mimicking those requests in code. We also discuss API tools, headers, working with JSON data, and extracting product information. Finally, we create a pandas data frame and export the results to a CSV file.

Understanding the Code

Before we begin, let's take a closer look at the website's code. At first glance, scraping the site may appear to require Selenium to click a button. However, by looking past the site's presentation, we can find the data we need behind the scenes.

Exploring the Inspect Element Tool

To gain a deeper understanding of the website's structure, we open the browser's inspect element tool. Although this tool is commonly used to view and analyze the HTML structure, we will use its Network tab to inspect the requests exchanged between the client and the server. This lets us find useful data that is not readily available in the HTML itself.

Analyzing Network Requests

As we navigate through the website and click on various buttons, we can watch the network requests being made. Exploring these requests uncovers the calls that carry the data we want to scrape. Whether we are new to this process or have prior experience, it pays to examine each request carefully so that we capture all the data we need.
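Browser developer tools can usually export the recorded network traffic as a HAR file, which is plain JSON. The sketch below shows one way to sift such an export for requests whose responses are JSON. The sample `har` dictionary and its URLs are made up for illustration; a real export would be loaded from disk with `json.load`.

```python
def json_endpoints(har):
    """Return the URLs of recorded requests whose response was JSON."""
    urls = []
    for entry in har["log"]["entries"]:
        mime = entry["response"]["content"].get("mimeType", "")
        if "json" in mime:
            urls.append(entry["request"]["url"])
    return urls


# Hypothetical, heavily trimmed HAR export (a real one has many more fields).
har = {
    "log": {
        "entries": [
            {
                "request": {"url": "https://example.com/"},
                "response": {"content": {"mimeType": "text/html"}},
            },
            {
                "request": {"url": "https://example.com/api/products?page=1"},
                "response": {"content": {"mimeType": "application/json"}},
            },
            {
                "request": {"url": "https://example.com/static/site.css"},
                "response": {"content": {"mimeType": "text/css"}},
            },
        ]
    }
}

candidates = json_endpoints(har)
print(candidates)
```

The URLs this returns are the candidates worth replicating in code.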

Mimicking Requests in Code

Instead of relying on the pretty pictures and CSS found on the website, we want to focus on obtaining the raw data from the requests. By mimicking these requests in our code, we can extract the exact data that is being processed by the website's JavaScript. Several tools, such as Postman or Insomnia, can aid us in generating the necessary code to replicate these requests.
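As a minimal sketch of what such a replicated request might look like, the block below rebuilds a call with Python's standard `urllib` module. The endpoint URL, the `page` and `pageSize` parameters, and the header values are all assumptions for illustration; the real ones come from the request observed in the Network tab.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Hypothetical endpoint; replace with the URL seen in the Network tab.
API_URL = "https://example.com/api/products"


def build_request(page=1, page_size=24):
    """Build (but do not send) a GET request that mirrors the browser's call."""
    query = urlencode({"page": page, "pageSize": page_size})
    return urllib.request.Request(
        f"{API_URL}?{query}",
        headers={
            "Accept": "application/json",
            "User-Agent": "Mozilla/5.0 (compatible; example-scraper)",
        },
        method="GET",
    )


def fetch_json(request, timeout=10):
    """Send the request and decode the JSON body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


request = build_request(page=2)
print(request.full_url)
```

Calling `fetch_json(request)` would perform the actual network call; it is left out here because the endpoint is a placeholder.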

Using API Tools

API tools are a key aid when scraping this way. They let us create, modify, and test requests outside the browser. By experimenting with different options and parameters, we can tailor a request to return exactly the data we require. Both Postman and Insomnia are good options, offering the flexibility needed for this kind of experimentation.
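The same kind of parameter experimentation can also be done directly in Python. The sketch below takes a captured URL and overrides selected query parameters; the URL and the parameter names (`category`, `page`, `pageSize`) are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def with_params(url, **overrides):
    """Return a copy of url with the given query parameters replaced or added."""
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query))
    params.update({key: str(value) for key, value in overrides.items()})
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical URL copied from the Network tab.
captured = "https://example.com/api/products?category=shoes&page=1&pageSize=24"

modified = with_params(captured, page=3, pageSize=48)
print(modified)
# https://example.com/api/products?category=shoes&page=3&pageSize=48
```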

Dealing with Response Headers

When replicating a request, it is essential to understand the purpose of each header the browser sends with it. Some headers are required for the server to return the data we want, while others are not relevant to our scraping efforts. By experimenting with the headers, removing them one at a time and checking whether the response still contains the data, we can determine which ones are necessary to include in our requests and which ones can be dropped.
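One way to make that experiment systematic is sketched below: drop each header in turn and keep the removal only if the request still works. The `still_works` callback is an assumption; in practice it would send the request with the trial headers and check that the response still contains the expected data. Here a stand-in checker simulates a server that needs two particular headers.

```python
def minimal_headers(headers, still_works):
    """Greedily remove headers that are not needed for the request to succeed."""
    needed = dict(headers)
    for name in list(headers):
        trial = {key: value for key, value in needed.items() if key != name}
        if still_works(trial):
            needed = trial
    return needed


# Hypothetical headers copied from the browser.
captured_headers = {
    "User-Agent": "Mozilla/5.0",
    "Accept": "application/json",
    "Accept-Language": "en-GB",
    "X-Requested-With": "XMLHttpRequest",
    "Referer": "https://example.com/products",
}


def fake_check(headers):
    """Stand-in for a real request: pretend the server needs these two headers."""
    return {"Accept", "X-Requested-With"} <= set(headers)


trimmed = minimal_headers(captured_headers, fake_check)
print(trimmed)
```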

Working with JSON Data

JSON (JavaScript Object Notation) is a commonly used format for transmitting data. In the context of web scraping, JSON can provide a structured representation of the data we are targeting. By parsing and normalizing the JSON response, we can easily explore and manipulate the data, making it more accessible for further analysis.
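A short illustration of parsing a JSON response with the standard `json` module follows. The response body is invented for the example; a real one would come from the replicated request.

```python
import json

# Hypothetical response body, shortened for illustration.
raw = """
{
  "total": 2,
  "products": [
    {"name": "Trail Shoe", "price": {"amount": 89.99, "currency": "GBP"}},
    {"name": "Road Shoe", "price": {"amount": 74.5, "currency": "GBP"}}
  ]
}
"""

data = json.loads(raw)

# Listing the top-level keys is a quick way to get oriented in an unfamiliar payload.
print(list(data))
print(data["products"][0]["price"]["amount"])
```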

Extracting Product Information

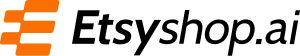

Within the JSON response, we find the product information we are interested in. By navigating through the nested structure of the JSON, we can access the list of products and retrieve the desired data. This step is crucial as it allows us to isolate and extract the specific information we need, such as product names, prices, URLs, and other relevant details.
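The sketch below shows how such a nested structure might be walked to pull out names, prices, and URLs. The key path (`data`, `results`, `products`) and the field names are assumptions; the real path has to be read off the actual response.

```python
def extract_products(payload):
    """Walk the nested payload and return one flat dict per product."""
    products = payload.get("data", {}).get("results", {}).get("products", [])
    return [
        {
            "name": product.get("name"),
            "price": product.get("price", {}).get("amount"),
            "url": product.get("url"),
        }
        for product in products
    ]


# Hypothetical payload shaped like a typical product listing response.
payload = {
    "data": {
        "results": {
            "products": [
                {"name": "Trail Shoe", "price": {"amount": 89.99}, "url": "/p/trail-shoe"},
                {"name": "Road Shoe", "price": {"amount": 74.5}, "url": "/p/road-shoe"},
            ]
        }
    }
}

rows = extract_products(payload)
print(rows[0])
```

Using `.get` with defaults keeps the extraction from failing when a key is missing from the response.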

Creating a Pandas Data Frame

To organize and manipulate the extracted data, we will create a pandas data frame. Pandas provides an efficient and convenient way to work with structured data, allowing us to analyze, transform, and export the data easily. We can normalize the JSON data and flatten the nested structure, ensuring that each piece of information resides in a dedicated column.
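A minimal sketch of the flattening step uses `pandas.json_normalize`, which expands nested dictionaries into dotted column names. The product records are made up for the example.

```python
import pandas as pd

# Hypothetical product records, as they might appear in the JSON response.
products = [
    {"name": "Trail Shoe", "url": "/p/trail-shoe",
     "price": {"amount": 89.99, "currency": "GBP"}},
    {"name": "Road Shoe", "url": "/p/road-shoe",
     "price": {"amount": 74.5, "currency": "GBP"}},
]

# Nested keys become columns such as "price.amount" and "price.currency".
df = pd.json_normalize(products)
print(df.columns.tolist())
print(df)
```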

Exporting Results to CSV

Once we have the data organized in a pandas data frame, we can export it to a CSV file for further analysis or integration with other tools. The CSV format is widely supported and can be easily imported into various data analysis platforms or shared with colleagues. In pandas, the export is a single call to the data frame's to_csv method.
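The export might look like the sketch below. The data and the output location are placeholders; the file is written to the system's temporary directory only so that the example does not clutter the working directory.

```python
import tempfile
from pathlib import Path

import pandas as pd

# Hypothetical flattened product data.
df = pd.DataFrame(
    [
        {"name": "Trail Shoe", "price.amount": 89.99, "price.currency": "GBP"},
        {"name": "Road Shoe", "price.amount": 74.5, "price.currency": "GBP"},
    ]
)

out_path = Path(tempfile.gettempdir()) / "products.csv"

# index=False leaves out the data frame's row index column.
df.to_csv(out_path, index=False)

lines = out_path.read_text(encoding="utf-8").splitlines()
print(lines[0])
```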

Conclusion

In this article, we have explored the process of scraping a website using Python. We looked at the site's code, inspected network requests, mimicked those requests in our own code, and used API tools to experiment with them. We also discussed headers, worked with JSON data, extracted product information, created a pandas data frame, and exported the results to a CSV file. By following these steps, you can gather the data a website loads behind the scenes and use it for your own analysis.

Highlights:

- Web scraping using Python code

- Inspecting network requests and mimicking them in code

- Utilizing API tools for customizing requests

- Dealing with response headers and working with JSON data

- Extracting specific product information

- Creating a pandas data frame for data organization

- Exporting the results to a CSV file

- Leveraging gathered data for analysis and other purposes

FAQ:

Q: Can I scrape any website using this method?

A: While this method can work for many websites, some may have measures in place to prevent scraping or require additional authentication. It's essential to understand the website's terms of service and respect any access restrictions.

Q: Are there any legal considerations when web scraping?

A: Yes, web scraping must be done ethically and legally. It is crucial to review the website's terms of service and comply with any applicable laws and regulations. Additionally, avoid overloading the server with excessive requests, as this can violate the website's usage policies.

Q: Can I scrape multiple pages with this method?

A: Yes, the method demonstrated in this article can be scaled to scrape multiple pages. By incorporating loops and dynamic page number retrieval, you can automate the scraping process and gather data from multiple pages.

Q: Is it possible to scrape websites with dynamic content that loads via AJAX?

A: Yes, the method showcased in this article can handle websites with dynamic content that loads via AJAX. By analyzing the network requests and mimicking the necessary requests in your code, you can retrieve the data you need, even if it is loaded dynamically.

Q: Can I extract data other than product information using this method?

A: Absolutely! The method demonstrated in this article can be adapted to extract various types of data from websites. By identifying the specific data you need and locating it within the JSON response, you can extend this approach to scrape information beyond product details.
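As a sketch of the multi-page scraping mentioned in the FAQ, the loop below requests page after page until one comes back empty. The `fetch_page` callback and the `products` key are assumptions; in practice `fetch_page` would send the replicated request for a given page number. A pause between requests helps avoid overloading the server.

```python
import time


def scrape_all_pages(fetch_page, max_pages=50, delay=1.0):
    """Collect products page by page until an empty page or max_pages is reached."""
    all_products = []
    for page in range(1, max_pages + 1):
        payload = fetch_page(page)
        products = payload.get("products", [])
        if not products:
            break
        all_products.extend(products)
        time.sleep(delay)  # be polite to the server between requests
    return all_products


def fake_fetch(page):
    """Stand-in for a real request: three pages of two products, then nothing."""
    if page > 3:
        return {"products": []}
    return {"products": [{"id": page * 10 + i} for i in range(2)]}


collected = scrape_all_pages(fake_fetch, delay=0)
print(len(collected))
```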